GPT stands for “Generative Pre-trained Transformer”. GPT models are a family of large neural network models that use the transformer architecture, which is well-suited for natural language processing tasks.

GPT models are trained with self-supervised learning. They learn to predict the next word (more precisely, the next token) in a sequence, using huge datasets of text drawn largely from the internet. Because the training targets come from the text itself, no human labeling is needed, and the model gradually learns the patterns and structures of human language. What the model learns during this pre-training can then be transferred to other natural language tasks such as translation, summarization and question answering.
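The key idea behind self-supervised pre-training is that the training labels are derived from the raw text itself: every word serves as the target for the words that precede it. The following is a minimal toy sketch under stated assumptions. Real GPT models work on subword tokens and learn with a neural network trained by gradient descent, not word counts. The helper names (`make_training_pairs`, `fit_counts`, `predict_next`) and the two-word context window are hypothetical choices made only for illustration.

```python
from collections import Counter, defaultdict


def make_training_pairs(text: str, context_size: int = 2):
    """Turn raw text into (context, next_word) pairs.

    The labels come from the text itself: each word is the target for
    the `context_size` words before it, so no human annotation is needed.
    """
    words = text.split()
    pairs = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        pairs.append((context, words[i]))
    return pairs


def fit_counts(pairs):
    """Toy stand-in for a model: count which word follows each context."""
    counts = defaultdict(Counter)
    for context, target in pairs:
        counts[context][target] += 1
    return counts


def predict_next(counts, context):
    """Return the most frequent next word seen after `context`, or None."""
    if context not in counts:
        return None
    return counts[context].most_common(1)[0][0]


corpus = "the cat sat on the mat . the cat sat on the sofa . the cat ran"
pairs = make_training_pairs(corpus)
model = fit_counts(pairs)

print(pairs[:2])                          # [(('the', 'cat'), 'sat'), (('cat', 'sat'), 'on')]
print(predict_next(model, ("the", "cat")))  # sat
```

A neural language model replaces the count table with learned parameters. It also generalizes to contexts it has never seen, where this toy returns `None`.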

Origins of GPT

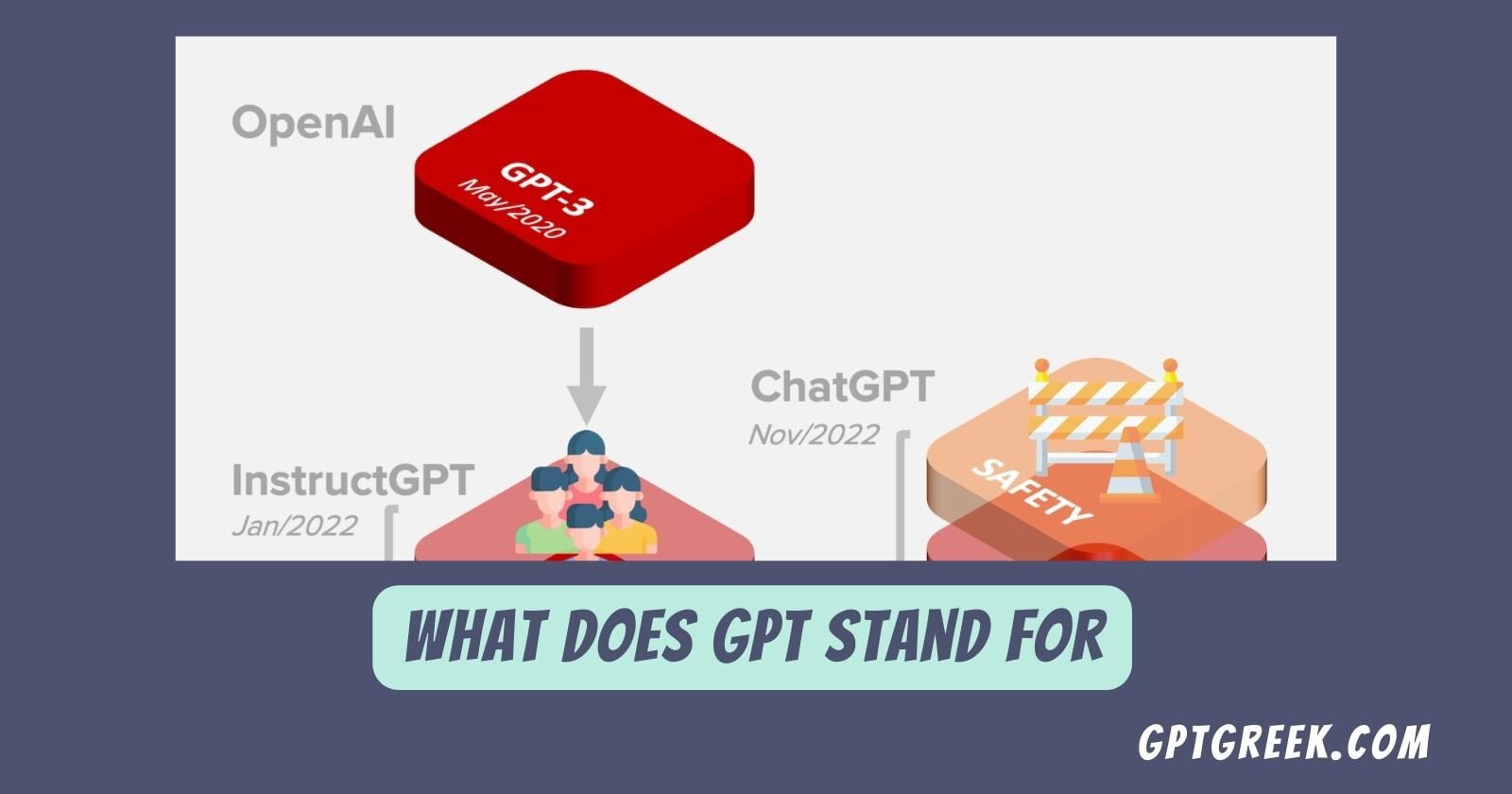

The first GPT model was introduced in 2018 by OpenAI. With 117 million parameters, it improved the state of the art on most of the language understanding benchmarks it was evaluated on.

This was followed in 2019 by GPT-2, with 1.5 billion parameters, roughly 13 times as many as the original GPT. GPT-2 showed much stronger performance on natural language tasks like text generation.

GPT-3, released in 2020, took language model capacity to a new level with 175 billion parameters, more than 100 times as many as GPT-2. Let’s explore GPT-3 and its capabilities in more detail.
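The size jumps between generations can be checked directly from the parameter counts given above. This short sketch only does that arithmetic. The dictionary and the `size_ratios` helper are illustrative names, not part of any library.

```python
# Parameter counts for each GPT generation, as stated in the text.
PARAMS = {"GPT": 117e6, "GPT-2": 1.5e9, "GPT-3": 175e9}


def size_ratios(params):
    """Ratio of each model's parameter count to its predecessor's."""
    names = list(params)  # dicts preserve insertion order (Python 3.7+)
    return {
        f"{cur} / {prev}": params[cur] / params[prev]
        for prev, cur in zip(names, names[1:])
    }


for label, ratio in size_ratios(PARAMS).items():
    print(f"{label}: {ratio:.1f}x")
# GPT-2 / GPT: 12.8x
# GPT-3 / GPT-2: 116.7x
```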

GPT-3 Overview

GPT-3 demonstrates extremely impressive natural language abilities:

- Text generation and completion – GPT-3 can generate coherent, human-like text and complete partial sentences with relevant, logically consistent outputs

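- Translation – It can translate text between languages from only a few examples, with results competitive with earlier unsupervised machine translation systems (especially when translating into English), though it still trails systems trained specifically for translation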

- Question answering – The model can answer natural language questions on a wide range of topics without being fine-tuned for question answering, drawing on knowledge absorbed during pre-training

- Summarization – GPT-3 can summarize longer texts, extracting key points and rewriting them concisely
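Text generation and completion repeat the next-word prediction step in a loop. The model scores possible next words, one is chosen and appended to the text, and the longer text becomes the context for the following prediction. The sketch below uses greedy decoding, which always picks the most likely word, over a hard-coded, hypothetical probability table `TOY_MODEL`. A real GPT model computes these probabilities with a neural network over subword tokens and conditions on the whole context. Deployed systems also often sample from the distribution rather than always taking the top choice.

```python
from typing import Callable, Dict, List

# Hypothetical toy "model": maps the last word to a distribution over next
# words. "<end>" is an assumed stop marker used only in this sketch.
TOY_MODEL: Dict[str, Dict[str, float]] = {
    "once": {"upon": 0.9, "more": 0.1},
    "upon": {"a": 1.0},
    "a": {"time": 0.7, "hill": 0.3},
    "time": {"<end>": 1.0},
}


def next_word_probs(context: List[str]) -> Dict[str, float]:
    """Look up next-word probabilities from the last word of the context."""
    return TOY_MODEL.get(context[-1], {"<end>": 1.0})


def complete(prompt: str,
             model: Callable[[List[str]], Dict[str, float]],
             max_new_words: int = 10) -> str:
    """Greedy autoregressive completion: append the most likely word each step."""
    words = prompt.split()
    for _ in range(max_new_words):
        probs = model(words)
        best = max(probs, key=probs.get)
        if best == "<end>":
            break
        words.append(best)  # the new word becomes part of the next context
    return " ".join(words)


print(complete("once", next_word_probs))  # once upon a time
```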

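GPT-3 handles these tasks without being fine-tuned for any of them. The task is instead described, and optionally demonstrated, in the text prompt given to the model. This ability is known as in-context learning, and it is called zero-shot, one-shot or few-shot learning depending on how many examples the prompt contains: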

GPT-3 often needs only a few examples, supplied in the prompt, to recognize the pattern of a new task, rather than extensive additional training. For example, after seeing a single translated sentence pair in its prompt, it can translate new sentences between those languages without any change to its weights.

This ability to adapt quickly makes GPT-3 far more versatile and reusable across natural language processing applications than earlier models, which typically had to be fine-tuned separately for each task.
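A few-shot prompt like the one described above is just plain text. The worked examples sit in the input, and the model is expected to continue the pattern. This sketch assembles such a prompt. The "English:/French:" layout and the `build_few_shot_prompt` helper are illustrative assumptions, not a format required by any particular API, and no model call is shown. Passing one example pair gives a one-shot prompt, and passing none gives a zero-shot prompt.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str,
                          src: str = "English", tgt: str = "French") -> str:
    """Assemble a translation prompt from demonstration pairs plus a query.

    The demonstrations live only in the prompt text; nothing here updates
    model weights. The prompt ends with the target-language label so that
    the model's continuation is the translation.
    """
    lines = [f"Translate {src} to {tgt}.", ""]
    for source, target in examples:
        lines.append(f"{src}: {source}")
        lines.append(f"{tgt}: {target}")
        lines.append("")
    lines.append(f"{src}: {query}")
    lines.append(f"{tgt}:")
    return "\n".join(lines)


# One-shot: a single translated sentence pair, then the new sentence.
prompt = build_few_shot_prompt([("Good morning.", "Bonjour.")], "Thank you very much.")
print(prompt)
```

Adding more pairs to the `examples` list turns the one-shot prompt into a few-shot one without any other change.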

Why Bigger Models Matter

The massive jump in GPT-3’s capabilities is largely thanks to its huge dataset and model size. As the models become bigger:

- They can capture far more information about how language works based on all the texts they are trained on

- They can learn more complex linguistic rules and structures

- Their knowledge becomes more generalizable to new tasks and topics

Bigger models can therefore unlock capabilities that smaller models simply don’t have enough information or complexity to achieve.

Figure: GPT model performance improves with greater scale

In fact, GPT-3 and its predecessors follow empirical scaling laws. As model size, dataset size and training compute grow, their loss on predicting the next word falls smoothly and predictably.

Specifically, the loss decreases as a power law of scale, so each doubling of model size cuts the loss by roughly the same fraction. The gains are therefore steady but diminishing: doubling the size does not double the capability. The trend is also an empirical fit over the scales studied so far, so it does not by itself guarantee that models can keep improving without limit.
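A power law in model size can be written as L(N) = (N_c / N)^α, where L is the next-word prediction loss and N is the parameter count. This sketch evaluates that formula to show the "same fraction per doubling" behavior. The constants α ≈ 0.076 and N_c ≈ 8.8 × 10^13 are approximately those reported by Kaplan et al. (2020) for non-embedding parameters. Treat them as illustrative: the headline GPT parameter counts include embeddings, and the fitted law only describes the range of scales that was studied.

```python
# Power-law fit of loss versus model size: L(N) = (N_C / N) ** ALPHA_N.
# Constants are approximate values from Kaplan et al. (2020); illustrative only.
ALPHA_N = 0.076
N_C = 8.8e13


def predicted_loss(n_params: float) -> float:
    """Predicted next-token loss (in nats) for a model with n_params parameters."""
    return (N_C / n_params) ** ALPHA_N


for n in (117e6, 1.5e9, 175e9):
    print(f"{n:.3g} params -> predicted loss {predicted_loss(n):.3f}")

# Every doubling multiplies the loss by the same factor, 2 ** -ALPHA_N,
# whatever the starting size.
factor = predicted_loss(2e9) / predicted_loss(1e9)
print(f"loss factor per doubling: {factor:.4f}")  # 0.9487
```

Each doubling lowers the predicted loss by only about 5%. This is why the gains from scale are steady rather than explosive.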

If model scale continues to grow rapidly, these trends suggest further substantial improvements in what natural language models like GPT can achieve.

GPT-3 Applications and Use Cases

GPT-3 provides a general natural language capability that allows it to be applied successfully across many domains and tasks:

Content Generation

- Automated writing for news articles, blog posts, marketing copy, essays and more

- Creative fiction writing and poetry composition

- Website and document creation by describing required content

Conversational AI

- Chatbots and virtual assistants

- Customer service automation

- Interactive gaming environments

Information Retrieval and Processing

- Understanding search engine queries

- Document summarization

- Data extraction and analysis

- Expert systems for technical support

Reasoning and Problem-Solving

- Answering questions on almost any topic

- Providing expert advice in specific domains

- Logical reasoning from written descriptions

These are only some of the possible applications, and GPT-3 has significant potential to transform workflows across many industries.

Other companies, such as Anthropic and AI21 Labs, are developing their own large language models aimed at even greater performance and safety. As model scale continues to grow, alongside research to align these models with human values, GPT-style models may become increasingly multipurpose AI assistants capable of automating a large proportion of information work.

Current Limitations of GPT Models

While they display impressively versatile reasoning and language understanding capabilities, GPT models have some important limitations:

- Limited real-world knowledge – GPT models have no way to directly experience or sense the world around them. Their knowledge comes only from the text they are trained on, so there are many gaps.

- Narrow skillset – They excel at language tasks but are often unreliable at other kinds of reasoning, such as mathematical and logical reasoning, spatial reasoning or physical intuition.

- Brittle understanding – Because their grasp of common-sense concepts is not grounded in real-world experience, their language understanding can break down easily outside of familiar patterns.

- Bias and safety issues – As a statistical model trained on Internet text, GPT can inherit problematic biases or generate toxic outputs.

Addressing these limitations while continuing to increase scale and performance is a key challenge for future research. Architectures that incorporate more general knowledge and common-sense reasoning abilities may help create safer and even more capable language models.

The Future of GPT Models

GPT-3 already displays surprisingly broad competence at language and reasoning tasks. If model scale continues to grow rapidly, GPT models are likely to become more generally capable and multipurpose.

In the short term, models like GPT-3 and GPT-4 will find widespread use in information processing applications across many industries:

- GPT models are being used to build virtual assistants, chatbots and support agents for customer service roles

- Applications using GPT for content generation are creating automated news reports, marketing copy, analysis reports and more

- Educational applications are leveraging GPT for automated lesson and curriculum planning

In the longer term, some researchers expect that continued scaling could eventually carry these models across an important threshold: artificial general intelligence (AGI), meaning systems capable of performing intellectual work across any domain at or above human level.

While current models still have a long path ahead to realize this goal fully, they provide an exciting glimpse at the potential for AI to match and eventually surpass human language understanding.

Conclusion

GPT models represent a revolutionary advance in natural language AI capabilities, built on scalable, self-supervised learning. At its release in 2020, GPT-3 was among the largest and most capable language models yet created, displaying human-like competence across a diverse range of language tasks without task-specific fine-tuning.

Rapidly improving abilities in text generation, reasoning, summarization and other applications make these models highly valuable for automating information work across almost any industry. As scale keeps increasing, proponents argue that GPT-style models could eventually become general-purpose assistants that match or exceed human capabilities.

Important challenges remain around bias, safety and grounding the models’ understanding in real-world common sense. But with responsible development, GPT models point the way towards an AI capable of excelling across the full spectrum of intellectual work.